Common factors that affect storage infrastructure performance include the configured RAID level, whether cache is enabled or disabled, thin LUN provisioning, latency from network hops and, in some cases, misconfigured multipathing.

Factors which might affect SAN performance

RAID Configurations

The RAID levels that usually cause the most concern from a performance perspective are RAID 5 and RAID 6, which is why many DB administrators request SAN volumes that are not RAID 5 or RAID 6. Parity-based RAID schemes, such as RAID 5 and RAID 6, perform differently than other RAID schemes such as RAID 1 and RAID 10. This is due to a phenomenon known as the write penalty.

Also Read: Types of RAID Levels

Small-block writes are relatively hard work for RAID 5 and RAID 6 because they require changes to be made within RAID stripes, which forces the system to read the other members of the stripe to be able to recompute the parity. In addition, random small-block write workloads require the R/W heads on the disk to move all over the platter surface, resulting in high seek times. The net result is that lots of small-block random writes with RAID 5 and RAID 6 can be slow. Even so, techniques such as redirect-on-write or write-anywhere filesystems and large caches can go a long way toward masking and mitigating this penalty.
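To make the write penalty concrete, here is a rough Python sketch that estimates host-visible IOPS for a given read/write mix. The penalty values (2 for RAID 1 and RAID 10, 4 for RAID 5, 6 for RAID 6) are the commonly quoted rule-of-thumb figures for small random writes. The disk count and per-disk IOPS are hypothetical, and the model ignores cache and full-stripe writes entirely.

```python
# Rule-of-thumb back-end I/Os generated by one small random host write.
WRITE_PENALTY = {"RAID 1": 2, "RAID 10": 2, "RAID 5": 4, "RAID 6": 6}


def host_iops(raw_backend_iops, write_fraction, raid_level):
    """Estimate host-visible IOPS for a read/write mix, ignoring cache."""
    if not 0.0 <= write_fraction <= 1.0:
        raise ValueError("write_fraction must be between 0 and 1")
    penalty = WRITE_PENALTY[raid_level]
    read_fraction = 1.0 - write_fraction
    # Each host read costs one back-end I/O; each host write costs `penalty`.
    return raw_backend_iops / (read_fraction + write_fraction * penalty)


if __name__ == "__main__":
    # Hypothetical RAID group: 8 disks at 150 IOPS each, 30% writes.
    raw = 8 * 150
    for level in WRITE_PENALTY:
        print(f"{level:8s} {host_iops(raw, 0.30, level):7.0f} host IOPS")
```

The more write-heavy the workload, the wider the gap between the mirrored and the parity-based levels becomes.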

Cache

Cache is the magic ingredient that has just about allowed storage arrays based on spinning disks to keep up with the performance demands placed on them.

Also Read: The next generation RAID techniques

However, not all workloads benefit equally from having a cache in front of spinning disks. Some workloads result in a high cache-hit rate, whereas others don’t. A cache hit occurs when I/O can be serviced from cache, whereas a cache miss requires access to the backend disks. Even with a large cache in front of your slow spinning disks, there will be some I/Os that result in cache misses and require use of the disks on the backend.

These cache-miss I/Os result in far slower response times than cache hits, meaning that the variance (spread between fastest and slowest response times) can be huge, such as from about 2 ms all the way up to about 100 ms. This is in stark contrast to all-flash arrays, where the variance is usually very small.

Most vendors will have standard ratios of disk capacity to cache capacity, meaning that you don’t need to worry so much about how much cache to put in a system. However, these vendor approaches are one-size-fits-all approaches and may need tuning to your specific requirements.
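The effect of the cache-hit rate on average response time can be sketched with a simple weighted average. The Python snippet below is only an illustration: it borrows the rough 2 ms and 100 ms figures mentioned above as assumed hit and miss latencies, and real arrays will show a distribution of values and not two fixed numbers.

```python
def mean_latency_ms(hit_ratio, hit_ms=2.0, miss_ms=100.0):
    """Average response time for a given cache-hit ratio.

    hit_ms and miss_ms are assumed, illustrative latencies.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * hit_ms + (1.0 - hit_ratio) * miss_ms


if __name__ == "__main__":
    for ratio in (0.99, 0.95, 0.90, 0.50):
        print(f"hit ratio {ratio:.0%}: {mean_latency_ms(ratio):6.2f} ms average")
```

Even a small drop in the hit ratio moves the average noticeably, because each miss is so much slower than a hit.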

Also Read: Importance of Cache technique in Block Based Storage Systems

Thin LUNs

Thin LUNs work on the concept of allocating space to LUNs and volumes on demand. So on day one when you create a LUN, it has no physical space allocated to it. Only as users and applications write to it is capacity allocated. This allocate-on-demand model can have an impact in two ways:

- The allocate-on-demand process can add latency.

- The allocate-on-demand process can result in a fragmented backend layout.

The allocate-on-demand process can theoretically add a small delay to the write process because the system has to identify free extents and allocate them to a volume each time a write request comes into a new area on a thin LUN. However, most solutions are optimized to minimize this impact.
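Both effects can be seen in a toy model. The Python sketch below is not how any particular array implements thin provisioning; `ExtentPool` and `ThinLun` are hypothetical names, and the pool simply hands out extents in ascending order. It shows that only the first write to a new area triggers an allocation, and that two LUNs sharing a pool end up with interleaved physical extents.

```python
class ExtentPool:
    """Shared pool that hands out physical extents in ascending order."""

    def __init__(self, total_extents):
        self.total_extents = total_extents
        self.next_free = 0

    def allocate(self):
        if self.next_free >= self.total_extents:
            raise RuntimeError("pool exhausted")
        extent = self.next_free
        self.next_free += 1
        return extent


class ThinLun:
    """Thin LUN that maps logical extents to physical ones on first write."""

    def __init__(self, pool, extent_size=4):
        self.pool = pool
        self.extent_size = extent_size  # blocks per extent
        self.mapping = {}  # logical extent index -> physical extent

    def write(self, lba):
        """Write one block; return True if a new extent had to be allocated."""
        logical_extent = lba // self.extent_size
        if logical_extent in self.mapping:
            return False  # already backed by physical space
        self.mapping[logical_extent] = self.pool.allocate()
        return True

    def physical_layout(self):
        """Physical extents in logical order."""
        return [self.mapping[k] for k in sorted(self.mapping)]


if __name__ == "__main__":
    pool = ExtentPool(total_extents=16)
    lun_a, lun_b = ThinLun(pool), ThinLun(pool)
    for lba in (0, 4, 8):  # both LUNs grow at the same time
        lun_a.write(lba)
        lun_b.write(lba)
    print("LUN A physical extents:", lun_a.physical_layout())
    print("LUN B physical extents:", lun_b.physical_layout())
```

In this model neither LUN ends up with contiguous physical extents, which is the fragmented backend layout described above.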

Also Read: Storage Provisioning and Capacity Optimization Techniques

A fragmented backend layout is most likely to affect heavily sequential workloads. If you suspect a performance issue caused by the use of thin LUNs, perform representative testing on both thin LUNs and thick LUNs and compare the results.

Network Hops

Within the network, whether FC SAN or IP, the number of switches that traffic has to traverse has an impact on response time. Hopping across more switches and routers adds latency, often referred to as network-induced latency. This latency is generally higher in IP/Ethernet networks, where store-and-forward switching techniques are used.

Also Read: The need for a Converged Enhanced Ethernet (CEE) Network

Multipathing

Multipath I/O (MPIO) is primarily a high-availability feature: if one path fails, another takes over without the application or user even noticing. However, MPIO can also have a significant impact on performance. For example, balancing all I/O from a host over two HBAs and HBA ports can provide more bandwidth than sending all I/O over a single port. It also makes the queues and CPU processing power of both HBAs available. MPIO can also balance I/O across multiple ports on the storage array. Instead of sending all host I/O to just two ports on a storage array, MPIO can balance the I/O from a single host over multiple array ports, for example, eight ports. This can significantly help to avoid hot spots on the array’s front-end ports, similar to the way that wide-striping avoids hot spots on the array’s backend.
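The balancing and failover behavior can be illustrated with a minimal round-robin path selector in Python. This is a sketch under simplifying assumptions, not the policy of any real MPIO driver: `RoundRobinMultipath` and the path names are hypothetical, and real implementations often offer other policies such as least-queue-depth.

```python
from collections import Counter


class RoundRobinMultipath:
    """Pick the next healthy path in round-robin order."""

    def __init__(self, paths):
        if not paths:
            raise ValueError("at least one path is required")
        self.paths = list(paths)
        self.failed = set()
        self._cursor = 0

    def fail(self, path):
        self.failed.add(path)

    def restore(self, path):
        self.failed.discard(path)

    def next_path(self):
        # Try each path at most once, skipping any that are marked failed.
        for _ in range(len(self.paths)):
            path = self.paths[self._cursor]
            self._cursor = (self._cursor + 1) % len(self.paths)
            if path not in self.failed:
                return path
        raise RuntimeError("no healthy paths available")


if __name__ == "__main__":
    mp = RoundRobinMultipath(["hba0:array_p0", "hba1:array_p1"])
    print(Counter(mp.next_path() for _ in range(1000)))  # even split
    mp.fail("hba0:array_p0")
    print(Counter(mp.next_path() for _ in range(1000)))  # all I/O on one path
```

With both paths healthy the I/O is split evenly; after a failure all I/O continues on the surviving path, at the cost of the bandwidth and queue capacity the second path provided.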

Standard SAN Performance Tools

Perfmon

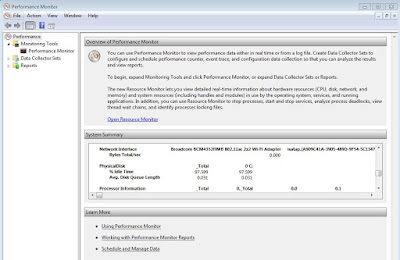

Perfmon is a Windows tool that allows administrators to monitor an extremely wide variety of host-based performance counters. From a storage perspective, these counters can be extremely useful, as they give you the picture as viewed from the host. For example, latency experienced from the host will be end-to-end latency, meaning that it will include host-based, network-based, and array-based latency. However, it will give you only a single figure, and it won’t break the overall latency down into host-induced latency, network-induced latency, and array-induced latency.

To open the Windows perfmon utility, type perfmon at a command prompt or in the Run dialog box.

iostat

Iostat is a common tool used in the Linux world to monitor storage performance. For example, run:

iostat -x 20

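This prints extended per-device I/O statistics every 20 seconds. The first report covers the time since boot, and each later report covers the preceding 20-second interval.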
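On Linux, interval statistics of this kind are derived from cumulative kernel counters such as those in /proc/diskstats. The Python sketch below shows how an average read and write wait time could be computed from two snapshots of one device's line, which is roughly what the await columns represent. The two sample lines are synthetic values made up for illustration, not captured output, and the function names are hypothetical.

```python
def parse_diskstats_line(line):
    """Extract the counters needed here from one /proc/diskstats line."""
    fields = line.split()
    return {
        "device": fields[2],
        "reads": int(fields[3]),      # reads completed
        "read_ms": int(fields[6]),    # milliseconds spent reading
        "writes": int(fields[7]),     # writes completed
        "write_ms": int(fields[10]),  # milliseconds spent writing
    }


def await_ms(before_line, after_line):
    """Return (read_await_ms, write_await_ms) between two snapshots."""
    before = parse_diskstats_line(before_line)
    after = parse_diskstats_line(after_line)

    def average(ms_key, count_key):
        ios = after[count_key] - before[count_key]
        if ios == 0:
            return 0.0  # no I/O of this type completed in the interval
        return (after[ms_key] - before[ms_key]) / ios

    return average("read_ms", "reads"), average("write_ms", "writes")


if __name__ == "__main__":
    # Synthetic snapshots of a hypothetical device, taken some seconds apart.
    before = "8 0 sda 1000 0 8000 2000 500 0 4000 5000 0 3000 7000"
    after = "8 0 sda 1100 0 8800 2400 600 0 4800 6500 0 3500 8900"
    read_await, write_await = await_ms(before, after)
    print(f"read await {read_await:.1f} ms, write await {write_await:.1f} ms")
```

As with Perfmon, these are host-side, end-to-end figures: they include time spent in the host, the network, and the array.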
